How Can Leas Better Trace Bitcoin Users Ethereum Mining Quadro M4000

Price graphs for numerous coins. As a result, you will see plenty of inventory available in both FE and custom versions. Yes, you could run all three cards in one machine. Theano and TensorFlow generally have quite poor parallelism support, but if you make it work you could expect a speedup of about 1. Will it be sufficient to train a meaningful convolutional net using Theano? R9 X, R9 X. How to read this? CUDA cores, graphics parallel processing loops: it should be sufficient for most Kaggle competitions and is a perfect card to get started with deep learning.

I will definitely keep it up to date for the foreseeable future. However, this benchmark page by Soumith Chintala might give you some hint of what you can expect from your architecture given a certain depth and size of the data. Any thoughts on this? GTX cards can be compared directly by looking at their memory bandwidth. The fastest in our list reaches. If you get an SSD, you should also get a large hard drive where you can move old data sets to. They have begun to eye the. I wish I had read this before my purchase; I would have bought the other card instead, as it seems a better value for the kind of NLP tasks I have at hand.

A Bitcoin mining calculator is an advanced calculator that estimates how many bitcoins will be mined based on cost, power, and difficulty increases. Please update the list with the new Tesla P and compare it with the Titan X. Let's explore this conundrum. Among the Tesla K80, K40 and others, which one do you recommend? Which GPU or GPUs should I get? But perhaps I am missing something…

Sometimes I had trouble stopping lightdm; you have two options: What can I expect from a Quadro MM (see http:)? This blog post will delve into that question and lend you advice that will help you make the choice that is right for you. I received them a couple of weeks ago, and… Do you have any data on how much memory bandwidth would be lost in this setup as opposed to putting all four cards in the same box? Amazon needs to use special GPUs which are virtualizable. You should also be looking at the number of CUDA cores. This means that Nvidia cards are definitely the best choice to mine BGold. It is supposed to switch algorithms based on current profitability. That is fine for a single card, but as soon as you stack multiple cards into a system they can produce a lot of heat that is hard to get rid of.
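A mining calculator of the kind mentioned above comes down to a little arithmetic. The sketch below is a simplified model and does not reproduce any particular calculator's method. It assumes that a block at difficulty 1 takes about 2^32 hashes on average, treats block discovery as a Poisson process, and ignores pool fees and future difficulty increases. The function names and all input numbers are hypothetical.

```python
import math

# Assumption: at difficulty 1 a block needs roughly 2**32 hashes on average
# (the exact figure is 2**48 / 0xffff, about 4.295e9).
HASHES_PER_UNIT_DIFFICULTY = 2**32
SECONDS_PER_DAY = 86_400


def expected_blocks_per_day(hashrate_hs: float, difficulty: float) -> float:
    """Expected number of blocks found per day at a given hash rate (hashes/s)."""
    return hashrate_hs * SECONDS_PER_DAY / (difficulty * HASHES_PER_UNIT_DIFFICULTY)


def prob_at_least_one_block(hashrate_hs: float, difficulty: float, days: float) -> float:
    """Odds of solving at least one block, modelling block discovery as Poisson."""
    expected = expected_blocks_per_day(hashrate_hs, difficulty) * days
    return 1.0 - math.exp(-expected)


def daily_profit(hashrate_hs: float, difficulty: float, block_reward_btc: float,
                 btc_price: float, power_watts: float, price_per_kwh: float) -> float:
    """Expected revenue per day minus electricity cost per day (same currency)."""
    revenue = expected_blocks_per_day(hashrate_hs, difficulty) * block_reward_btc * btc_price
    electricity = power_watts / 1000 * 24 * price_per_kwh
    return revenue - electricity


# Hypothetical inputs, not real market data.
rig = dict(hashrate_hs=14e12, difficulty=1e12)
print(f"blocks/day: {expected_blocks_per_day(**rig):.2e}")
print(f"P(>=1 block in 30 days): {prob_at_least_one_block(days=30, **rig):.4f}")
profit = daily_profit(block_reward_btc=12.5, btc_price=10_000,
                      power_watts=1400, price_per_kwh=0.10, **rig)
print(f"profit/day: {profit:.2f}")
```

Try it with your own hash rate, power draw, and local electricity price. You will see how strongly the electricity term can dominate when rates are steep.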

Bitcoin Mining by provitaly | VideoHive

I took that picture while my computer was lying on the ground. I wouldn't have known what to ask for, so the part number and link are really helpful. Do you know when it will be in stock again? One final question, which may sound completely stupid. For example, one GTX is as fast as 0. Taking all that into account, would you eventually suggest two GTX, two GTX, or a single Ti? I was going for the GTX Ti, but your argument that two GPUs are better than one for learning purposes caught my eye. I gave it a go, as I could return it if needed. They are not huge beasts when it comes to power consumption: not as low as the GeForce cards out there, but pretty decent. If I live in a high-expense neighborhood and they offer free shipping, I wonder whether, once they get my zip code, they calculate if it is worth their while to let me have that offer at that price. The is trading higher than former prices, and the is trading at former prices. So I figured: no harm in waiting. This is not true for Kepler or Maxwell, where you can store 16-bit floats but not compute with them (you need to cast them to 32-bit). By comparison, an Ethereum currency chart shows a correlating pop in the last few months: Thanks for the feedback, art3D. You can help yourself with these two links: Parallelism will be faster the more GPUs you run per box, and if you only want to parallelize 4 GPUs, then 4 GPUs in a single box will be much faster and cheaper than 2 nodes networked with InfiniBand. I do not know about graphics, but it might be a good choice for you over the GTX if you want to maximize your graphics performance now rather than save some money to upgrade to another GPU later. Bitcoin Mining by provitaly, VideoHive, 18 Nov. Bitcoin mining at home can be an expensive proposition, especially in places where electricity rates are steep. A holistic outlook would be very educational. Visual Studio 64-bit, CUDA 7.
SpeedFan has a graph where you can see the real-time history of the readings. AnandTech has a good review of how it works and its effect on gaming: However, this performance hit is due to software and not hardware, so you should be able to write some code to fix the performance issues. You might also be able to snatch a cheap Titan X Pascal from eBay. Ethereum Stack Exchange is a question and answer site for users of Ethereum, the decentralized application platform and smart-contract-enabled blockchain. I am primarily going to quadruple my memory at this time. It was not the easiest task, as the environments were different (cloud, bare metal) and the hardware was different: g2.
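On cards that can store 16-bit floats but not compute with them, the pattern is to keep the data in half precision to save memory and cast to 32-bit before the arithmetic. The NumPy sketch below mimics that pattern on the CPU. The array shapes and the `forward` function are hypothetical, and the sketch only illustrates the memory saving, not GPU speed.

```python
import numpy as np

rng = np.random.default_rng(0)

# Weights are stored in half precision: half the memory of float32.
weights_fp16 = rng.standard_normal((1024, 1024)).astype(np.float16)
inputs = rng.standard_normal((64, 1024)).astype(np.float32)


def forward(x: np.ndarray, w_stored: np.ndarray) -> np.ndarray:
    """Cast the stored 16-bit weights up to float32, then compute in float32."""
    return x @ w_stored.astype(np.float32)


out = forward(inputs, weights_fp16)
print("fp16 storage:", weights_fp16.nbytes, "bytes")
print("fp32 equivalent:", weights_fp16.astype(np.float32).nbytes, "bytes")
print("output dtype:", out.dtype)
```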

Never had this. Are you using single or double precision floats? Theoretically the AMD card should be faster, but the problem is the software: I would try pylearn2, convnet2, and Caffe and pick whichever suits you best. I am also hoping James Jab is still monitoring this thread occasionally: I was wondering what your thoughts are on this. Do you think it could deliver increased performance on a single experiment? Jan 23, Bloggers, Rasim Muratovic. Libraries like deepnet, which is programmed on top of cudamat, are much easier to use for non-image data, but the available algorithms are partially outdated and some algorithms are not available at all. GTX no longer recommended; added performance relationships between cards. Update: I wonder what exactly happens when we exceed the 3.

I think you can also get very good results with conv nets that feature less memory-intensive architectures, but the field of deep learning is moving so fast that 6 GB might soon be insufficient. So the best advice might be just to look at the documentation and examples, try a few libraries, and then settle on something you like and can work with. It was really helpful for me in deciding on a GPU! Is the only difference the 11 GB instead of 12 and a slightly faster clock, or are some features disabled that could cause problems with deep learning? You can mine very effectively on testnet using the Ethereum Wallet. Even if you are using 8 lanes, the drop in performance may be negligible for some architectures (recurrent nets with many time steps; convolutional layers) or some parallel algorithms (1-bit quantization, block momentum). I am thinking of putting together a multi-GPU workstation with these cards.
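You can estimate whether 8 lanes hurt from how long a mini-batch takes to cross the bus. The sketch assumes theoretical peak PCIe rates (real throughput is lower) and a hypothetical batch of 128 float32 images at 224x224. Compare the printed times with how long one training step takes on your card.

```python
# Per-lane, per-direction peak throughput in bytes/s after line-encoding overhead.
PCIE_LANE_BYTES_PER_S = {
    2: 5e9 * (8 / 10) / 8,      # PCIe 2.0: 5 GT/s with 8b/10b encoding, 500 MB/s
    3: 8e9 * (128 / 130) / 8,   # PCIe 3.0: 8 GT/s with 128b/130b encoding, ~985 MB/s
}


def transfer_ms(num_bytes: int, lanes: int, gen: int = 3) -> float:
    """Theoretical best-case time to move num_bytes from host to GPU, in milliseconds."""
    return num_bytes / (PCIE_LANE_BYTES_PER_S[gen] * lanes) * 1000


# Hypothetical mini-batch: 128 RGB images of 224x224 pixels stored as float32.
batch_bytes = 128 * 3 * 224 * 224 * 4

for gen in (2, 3):
    for lanes in (16, 8, 4):
        ms = transfer_ms(batch_bytes, lanes, gen)
        print(f"PCIe {gen}.0 x{lanes}: {ms:6.2f} ms per batch")
```

Suppose the transfer time is small relative to the compute time per step and transfers overlap with computation. In that case, halving the lane count changes little. The difference would show up mainly for bandwidth-heavy parallel algorithms.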

Which GPU(s) to Get for Deep Learning: My Experience and Advice for Using GPUs in Deep Learning

Slower cards with these features will often outperform more expensive cards on PCIe 2. The aluminum fins of the heat sink are clean. Still in the planning phase, so I may revise it quite a bit. The is trading higher at former prices, and the is trading at former prices. Can you share any thought on what compute power is required or what is typically desired for transfer learning i. How does this card rank compared to the other models? The simulations, at least at first, would be focused on robot or human modeling to allow a neural network more efficient and cost effective practice before moving to an actual system, but I can broach that topic more deeply when I get a

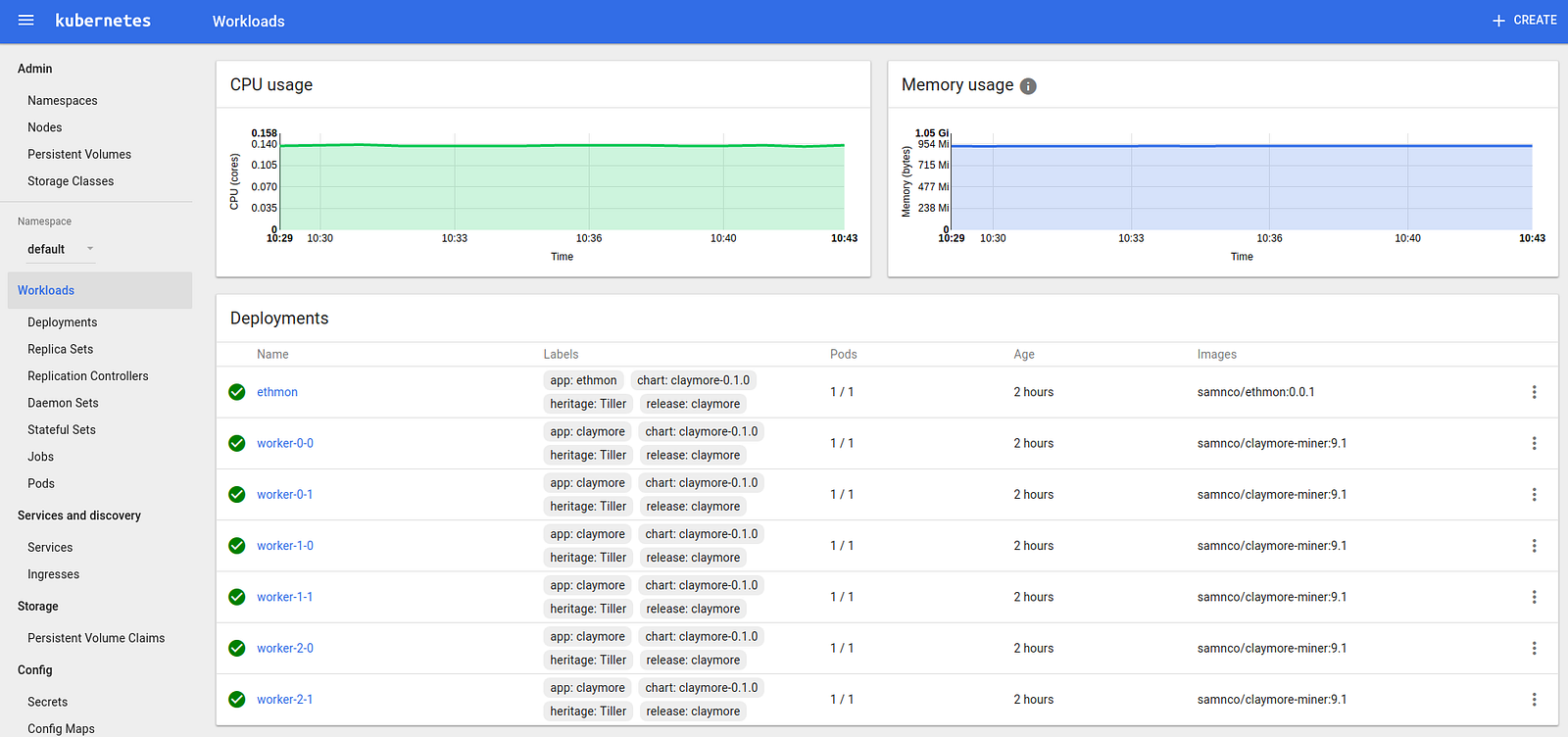

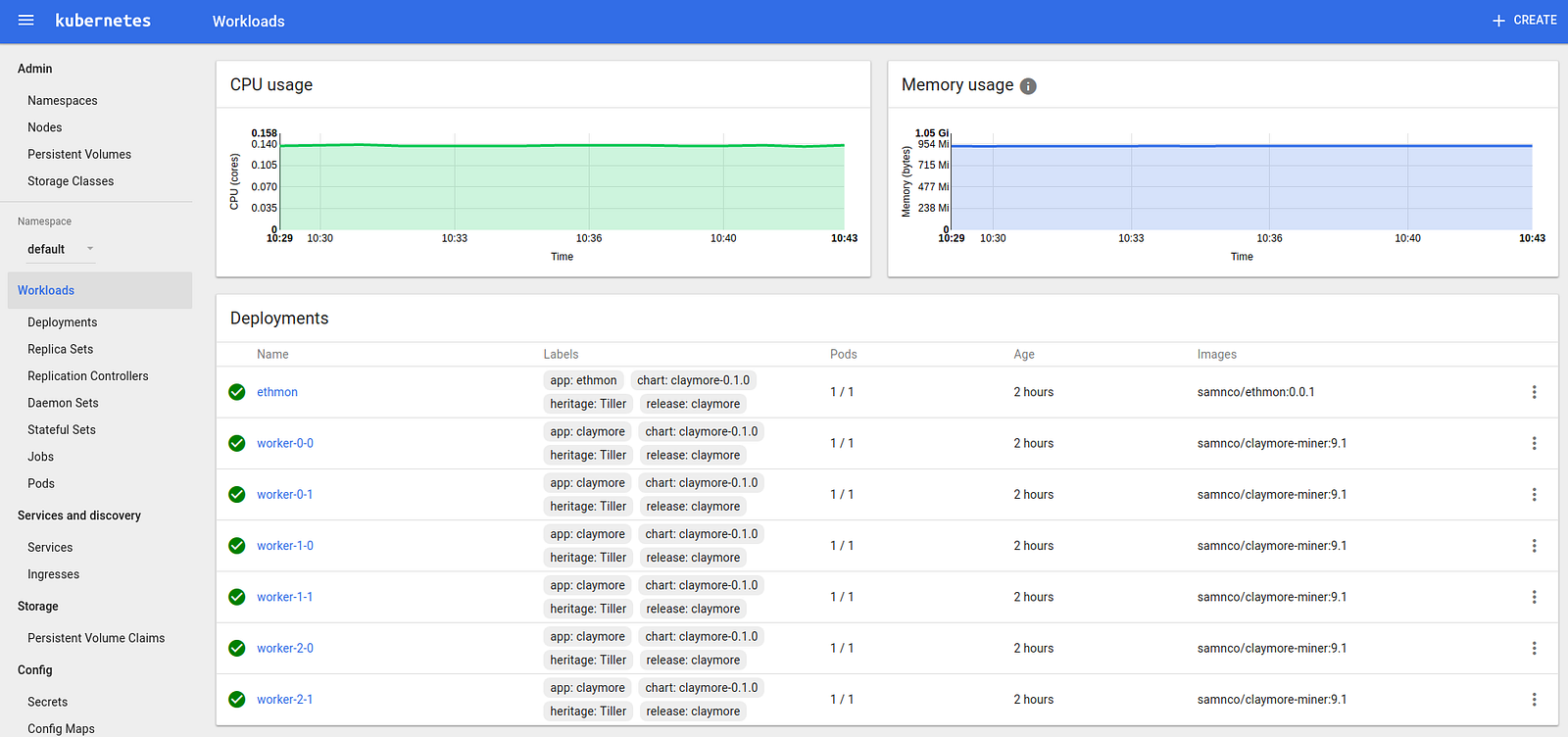

How To View My Bitcoin Wallet Full Stack Hello World Voting Ethereum Dapp more experience under my belt. Thank you very much for providing useful information! Is there a good Dell T thread somewhere? Yes, deep learning is generally done with single precision computation, as the gains in precision do not improve the results greatly. What kind of libraries would you recommend for the same? This is Kubernetes on GPU steroids.
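To get a feel for why single precision is usually enough, the NumPy sketch below compares a float32 matrix product against a float64 reference. The matrix sizes and random seed are arbitrary choices. What matters is the order of magnitude of the relative error, not the exact value.

```python
import numpy as np

rng = np.random.default_rng(42)
a = rng.standard_normal((256, 256))
b = rng.standard_normal((256, 256))

reference = a @ b  # float64 result used as the reference
single = (a.astype(np.float32) @ b.astype(np.float32)).astype(np.float64)

rel_err = np.linalg.norm(single - reference) / np.linalg.norm(reference)
print(f"float32 machine epsilon: {np.finfo(np.float32).eps:.1e}")
print(f"relative error of the float32 matrix product: {rel_err:.1e}")
```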

How much did you pay? This blog might provide some insight into the question. Such a setup is in general suitable for data science work, but for deep learning work I would get a cheaper setup and just focus on the GPUs, though I think this is a matter of personal taste. This is often not advertised for CPUs, as it is not so relevant for ordinary computation, but you want to choose the CPU with the larger memory bandwidth (memory clock times memory controller channels). About a year and a half after the network started, it was discovered that high-end graphics cards were much more efficient at bitcoin mining. It offers a x increase in hashing power while reducing power consumption compared to all the previous technologies. I have learned a lot in these past couple of weeks about how to build a good computer for deep learning. Below, we have researched some graphics cards and their mining performance. Right now the Ti and the only have a small price premium, and the GTX Ti is almost completely unaffected by the mining boom. We will probably be running moderately sized experiments and are comfortable losing some speed for the sake of convenience; however, if there would be a major difference between the and k, then we might need to reconsider. The CPU does not need to be fast or have many cores. I was under the impression that single precision could potentially result in large errors. However, when all GPUs need high-speed bandwidth, the chip is still limited by the 40 PCIe lanes that are available at the physical level. The GTX Ti would still be slow for double precision.
Bandwidth can be compared directly within an architecture, for example the performance of Pascal cards like GTX vs. Along that line, are the memory bandwidth specs not apples-to-apples comparisons across different Nvidia architectures? Find the hashrates of graphics cards and submit yours to help others. Here is the board I am looking at. Your card, although crappy, is a Kepler card and should work just fine. Do you know if it will be possible to use an external GPU enclosure such as a Razer Core for deep learning? The Bitcoin motion graphic features a great animation of a rotating Bitcoin. No comparison of Quadro and GeForce is available anywhere. From my experience, the ventilation within a case has very little effect on performance.
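Both bandwidth rules of thumb above reduce to simple products. The sketch assumes 64-bit DDR channels on the CPU side. On the GPU side it uses two Pascal cards chosen here for illustration: the GTX 1080 (10 Gbps GDDR5X on a 256-bit bus) and the GTX 1070 (8 Gbps GDDR5 on a 256-bit bus). As the text says, such peak numbers are only directly comparable within one architecture.

```python
def cpu_bandwidth_gbs(transfer_rate_mts: float, channels: int, bus_width_bits: int = 64) -> float:
    """Peak DRAM bandwidth in GB/s: transfers/s x bytes per transfer x channels."""
    return transfer_rate_mts * 1e6 * (bus_width_bits / 8) * channels / 1e9


def gpu_bandwidth_gbs(data_rate_gbps_per_pin: float, bus_width_bits: int) -> float:
    """Peak GPU memory bandwidth in GB/s: per-pin data rate x bus width in bytes."""
    return data_rate_gbps_per_pin * bus_width_bits / 8


print(f"DDR4-2400, quad channel: {cpu_bandwidth_gbs(2400, 4):.1f} GB/s")
print(f"GTX 1080: {gpu_bandwidth_gbs(10, 256):.0f} GB/s")
print(f"GTX 1070: {gpu_bandwidth_gbs(8, 256):.0f} GB/s")
```

Within Pascal, the ratio of these two GPU figures (320 vs 256 GB/s) is a reasonable first guess at their relative deep learning speed. Across architectures, the same numbers can mislead.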

Nvidia speaks out against rising price of GPUs due to cryptocurrency

Keep up the great work; we look forward to reading more from you in the future! Unified memory is more a theoretical than a practical concept right now. If you train something big and hit the 3. You need to be very aware of your hardware and how it interacts with deep learning algorithms to gauge whether you can benefit from parallelization in the first place. CPU mining was popular a while back, with the rise of Bitcoin, but started becoming obsolete when chips designed specifically for mining appeared. Don't know about Poser. How awesome is this? On it, it looks like you can compare the gaming difference between two boards. Multicurrency mining pool with an easy-to-use GUI miner. So where did they all go? Indeed, many people have asked about this and I would also be curious about the performance. GTX Titan X cannot be compared directly, because different architectures with different fabrication processes (in nanometers) utilize the given memory bandwidth differently. Some articles were speculating a few days before their release that they might be deactivated by Nvidia, reserving this feature for future Nvidia P Pascal cards. This is indeed something I overlooked, which is actually quite an important issue when selecting a GPU. There are currently major supply issues with and graphics cards. I have not changed CPUs in mine yet. Usually with success, but I must admit some environments have been challenging.
Single quad-core hyper-threaded processor, Xeon E at 2.

However, the very large memory and high speed, equivalent to a regular GTX Titan X, are quite impressive. Overall, things are running in the low to mid 40s. I hope you will continue to do so! However, in the case of having just one GPU, is it necessary to have more than 16 or 28 lanes? To make the choice that is right for you. As noted, 4K connectivity is sub-par with regard to existing standards. They even said that it can also replicate 4 x16 lanes on a CPU which has only 28 lanes. Yes, you can train and run multiple models at the same time on one GPU, but this might be slower if the networks are big (you do not lose performance if the networks are small), and remember that memory is limited.

First of all, I stumbled upon your blog when looking for a deep learning configuration, and I loved your posts, which confirm my thoughts. Bitcoin mining hardware comparison: currently, based on (1) price per hash and (2) electrical efficiency, the best Bitcoin miner options are: More than 4 GPUs still will not work due to the poor interconnect. However, the prices of the latter cards are significantly. Simply drag and drop. The performance depends on the software. These have no monetary value, but you can use the testnet to write and test code. If you want the fastest bitcoin mining video card on the planet, it's a no-brainer: The riser idea sounds good! This means that you can benefit from the reduced memory use, but not yet from the increased computation speed of 16-bit computation. I will have to look at those details, make up my mind, and update the blog post.

As for other cryptocurrencies, you will get abysmal hash rates (the speed at which you mine), so it won't be worth it. However, I do not have the hardware to make such tests. One question: what should I look for in an upgrade board? The only downside of using a GTX card vs. Windows 7 64-bit, Nvidia drivers: Are these the cards that Nvidia manufactures and sells itself, or third-party reference-design cards like those from EVGA or Asus? Have a question about GPU mining, bitcoin miners, or litecoin miners? Later I ventured further down the road and developed a new 8-bit compression technique, which enables you to parallelize dense or fully connected layers much more efficiently with model parallelism compared to 32-bit methods. If you are a hobbyist, you likely will not even notice any downside to using a GTX card, but the cost savings will be substantial. Would you suggest upgrading the motherboard or using the old one?
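The idea behind compressing what GPUs exchange is to send 8-bit codes instead of 32-bit floats. The sketch below uses a plain linear (absmax) quantizer as a stand-in. It is not the 8-bit technique described above, and the helper names and gradient values are hypothetical. It shows the 4x reduction in bytes and that the rounding error per value stays within half a quantization step.

```python
def quantize_8bit(values: list[float]) -> tuple[list[int], float]:
    """Linear absmax quantization: map floats to integer codes in [-127, 127]."""
    scale = max(abs(v) for v in values) / 127 or 1.0  # avoid a zero scale for all-zero input
    codes = [round(v / scale) for v in values]
    return codes, scale


def dequantize_8bit(codes: list[int], scale: float) -> list[float]:
    """Recover approximate float values from the integer codes."""
    return [c * scale for c in codes]


def pack(codes: list[int]) -> bytes:
    """One byte per value (two's complement), versus four bytes for a float32."""
    return bytes(c & 0xFF for c in codes)


grads = [0.5, -1.27, 0.003, 0.9, -0.25]  # hypothetical gradient values
codes, scale = quantize_8bit(grads)
restored = dequantize_8bit(codes, scale)
payload = pack(codes)

print("codes:", codes)
print("bytes sent:", len(payload), "instead of", 4 * len(grads))
print("max abs error:", max(abs(g - r) for g, r in zip(grads, restored)))
```

A linear quantizer wastes resolution when most values are small and a few are large. That is one reason more elaborate 8-bit schemes use non-uniform code spacing.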

www.czechcrocs.cz Česká asociace pro chov a ochranu krokodýlů o. s.
